Deepseek R1 vs. Claude 3.5 Sonnet: A Developer’s Inspiring Journey 🚀

If you’ve ever wrestled with large language models (LLMs) for code reviews, or even just toyed with the idea, you’ve likely wondered: is there a better way? After months of sending prompts to ChatGPT, one developer set out to find it, digging into the nitty-gritty of LLM-based code analysis. Their findings, shared on Reddit, pointed to a striking conclusion: across 500 real pull requests, Deepseek R1 caught more bugs than Claude 3.5 Sonnet, including a higher share of the critical ones.

🛠️ The Quest for an Efficient Code Review Tool

“Been working on evaluating LLMs for code review and wanted to share some interesting findings comparing Claude 3.5 Sonnet against Deepseek R1 across 500 real pull requests.”

Right from the start, the developer’s goal was clear: reduce complexity, save time, and catch critical issues before they derail production. Manually reviewing code for race conditions, type mismatches, and security loopholes is tedious and downright nerve-wracking. This evaluation was a chance to see whether LLMs could genuinely lift that burden.

🔍 Surprising Results & Key Insights

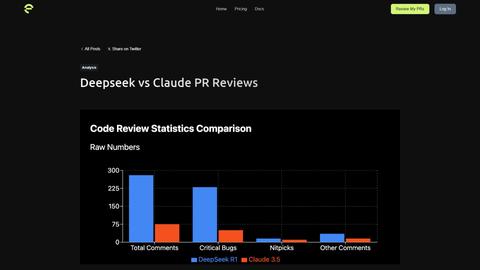

“The results were pretty striking: Claude 3.5: 67% critical bug detection rate, Deepseek R1: 81% critical bug detection rate (caught 3.7x more bugs overall).”

These numbers point to a clear gap between the two models on this set of pull requests. Here’s what stood out:

✅ Holistic Bug Detection: According to the developer’s findings, Deepseek R1 not only flagged immediate mistakes but also did better at connecting subtle issues across multiple files.

✅ Higher Accuracy on Security Vulnerabilities: Security flaws are tricky, often buried in logic that spans different modules. The developer credits Deepseek R1’s broader contextual understanding for its edge here.

✅ Reduced Human Overhead: With an LLM handling first-pass checks for race conditions, type mismatches, and logic errors, developers can spend more time building features and less time firefighting.

For busy engineering teams, these benefits can translate into less time chasing elusive bugs and more time shipping quality software on schedule.
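The two headline numbers measure different things, which is how a 67% vs. 81% gap in critical-bug detection can sit alongside a 3.7x gap in total bugs caught. The sketch below shows one plausible way to compute both metrics from labeled review results. The `PullRequest` structure, the bug IDs, and the model names are hypothetical, the data is made up, and the original evaluation may define its metrics differently.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PullRequest:
    # Hypothetical ground truth for one PR: bug ID -> whether it is critical.
    bugs: dict[str, bool]
    # Hypothetical bug IDs each model correctly flagged, keyed by model name.
    flagged: dict[str, set[str]] = field(default_factory=dict)


def critical_detection_rate(prs: list[PullRequest], model: str) -> float:
    """Fraction of all critical bugs across the dataset that `model` flagged."""
    total = caught = 0
    for pr in prs:
        critical = {bug for bug, is_critical in pr.bugs.items() if is_critical}
        total += len(critical)
        caught += len(critical & pr.flagged.get(model, set()))
    return caught / total if total else 0.0


def bugs_caught(prs: list[PullRequest], model: str) -> int:
    """Number of real bugs (any severity) that `model` flagged."""
    return sum(len(set(pr.bugs) & pr.flagged.get(model, set())) for pr in prs)


# Toy data for illustration only, NOT the evaluation's real results.
prs = [
    PullRequest(
        bugs={"race-1": True, "typo-1": False, "null-1": False},
        flagged={"model_a": {"race-1", "typo-1", "null-1"}, "model_b": {"race-1"}},
    ),
    PullRequest(
        bugs={"sqli-1": True, "type-1": False},
        flagged={"model_a": {"sqli-1", "type-1"}, "model_b": set()},
    ),
]

for model in ("model_a", "model_b"):
    print(model, critical_detection_rate(prs, model), bugs_caught(prs, model))

ratio = bugs_caught(prs, "model_a") / bugs_caught(prs, "model_b")
print(f"model_a caught {ratio:.1f}x as many bugs overall")
```

In this toy dataset, `model_a` catches every critical bug and `model_b` catches half of them, yet the overall count differs by 5x because `model_b` flags none of the non-critical bugs.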

🌱 A Peek into the Developer’s Journey

“What surprised me most wasn’t just the raw numbers, but how the models differed in what they caught.”

Behind these stats lies a personal journey that started with a simple question: Could an AI truly act as a reliable code reviewer?

The developer spent months experimenting with both Claude 3.5 Sonnet and Deepseek R1, running them against 500 real pull requests. Unlike synthetic tests or curated examples, these PRs came from live production codebases, so the comparison reflects genuine, hands-on usage.

Each discovery, whether an edge case missed by one model or a surprising bug flagged by the other, sharpened the comparison. That persistence paid off, showing how dramatically different LLMs can vary in what they catch.

🙌 Addressing Your Pain Points with Deepseek R1

Ever spent hours debugging, only to realize you missed a hidden security flaw?

Or maybe you’ve had to comb through multiple repositories just to uncover a single race condition?

That’s exactly the kind of developer headache Deepseek R1 can help reduce.

- Broad Context Analysis: Deepseek R1 handles cross-file issues well, such as spotting the one line in an otherwise unassuming file that could break your entire application.

- Time Efficiency: Automating routine checks spares you much of the monotony of manual review, so you can focus on creative or high-priority tasks.

- Confidence in Production: With a higher critical-bug detection rate in this evaluation (81% vs. 67% for Claude 3.5 Sonnet), you can merge pull requests with more peace of mind.

⭐ Real-World Wins & Less Complexity

Imagine you’re working on a high-stakes e-commerce site. A single logic error in your payment module could lead to lost transactions or double charges. With Deepseek R1 reviewing your code before each release, subtle logic oversights that slip past tired human eyes have a much better chance of being caught.

Fewer frantic hotfixes, fewer production nightmares, and happier customers.
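To make the double-charge scenario concrete, here is a hypothetical Python sketch of the kind of race condition a reviewer, human or LLM, should flag: a check-then-act payment handler that lets concurrent retries charge the same order more than once. `PaymentService`, its methods, and the simulated gateway delay are invented for illustration and do not come from the original evaluation.

```python
import threading
import time


class PaymentService:
    """Hypothetical payment handler used only to illustrate a double-charge bug."""

    def __init__(self) -> None:
        self.charges: list[str] = []  # order IDs that were actually charged
        self._paid: set[str] = set()  # orders already recorded as paid
        self._lock = threading.Lock()

    def charge_buggy(self, order_id: str) -> None:
        # BUG: check-then-act without synchronization. Concurrent retries
        # can all pass the check before any of them records the payment.
        if order_id not in self._paid:
            time.sleep(0.01)  # simulate a slow payment-gateway call
            self.charges.append(order_id)
            self._paid.add(order_id)

    def charge_fixed(self, order_id: str) -> None:
        # FIX: hold a lock so the check and the update happen atomically.
        with self._lock:
            if order_id not in self._paid:
                time.sleep(0.01)  # simulate a slow payment-gateway call
                self.charges.append(order_id)
                self._paid.add(order_id)


def run(method_name: str, retries: int = 5) -> int:
    """Fire several concurrent charge attempts for one order; return charge count."""
    svc = PaymentService()
    method = getattr(svc, method_name)
    threads = [threading.Thread(target=method, args=("order-42",)) for _ in range(retries)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return svc.charges.count("order-42")


print("buggy:", run("charge_buggy"))  # may print more than 1 (timing-dependent)
print("fixed:", run("charge_fixed"))  # always 1
```

The lock only protects a single process. Across multiple servers you would typically need shared state instead, such as a database uniqueness constraint or an idempotency key checked before the charge goes through.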

💡 Why It’s Worth Exploring

Whether you’re a senior developer juggling multiple projects or a solo founder building the next big app, reliable LLM-based code reviews can be a game-changer. As the developer behind this analysis learned, taking that first step—testing your code with different AI tools—can spark major improvements in workflow and product stability.

For more details on how Deepseek R1 stacks up, check out the full breakdown on the Entelligence.ai blog, or dive into the Reddit thread to see what other devs have to say. It might be just what you need to simplify your code reviews and reclaim your valuable time.

🚀 Ready to Dive In?

A thousand-line code review starts with a single pull request. In this evaluation, Deepseek R1 posted a higher bug detection rate than Claude 3.5 Sonnet on real-world PRs, which can translate into real time savings. If you’re looking for a nudge to level up your dev workflow, consider giving it a shot.

🔗 Relevant Links:

👉 Reddit Discussion

👉 Full Evaluation Report

Happy coding—and happier code reviewing! 🚀